Are you using Large Language Models (LLMs) such as ChatGPT, Copilot, Gemini, etc.?

A friend recently raised privacy concerns about people using AI chat assistants like ChatGPT in the workplace and sharing business information with them. Is there a way to use similar tools offline, without having to share information online?

The answer is YES, and the solution is to use LLMs locally.

This article is written for business owners, employees, content creators, and anyone interested in AI writing with privacy in mind.

Problem with Online AI Writing Tools

AI writing tools are increasingly popular, but they raise valid privacy concerns. Online AI writing services typically send your prompts and documents to remote servers, where they may be stored or processed outside your control. However, you can instead run large language models (LLMs) locally on your own machine, so your data never has to leave it.

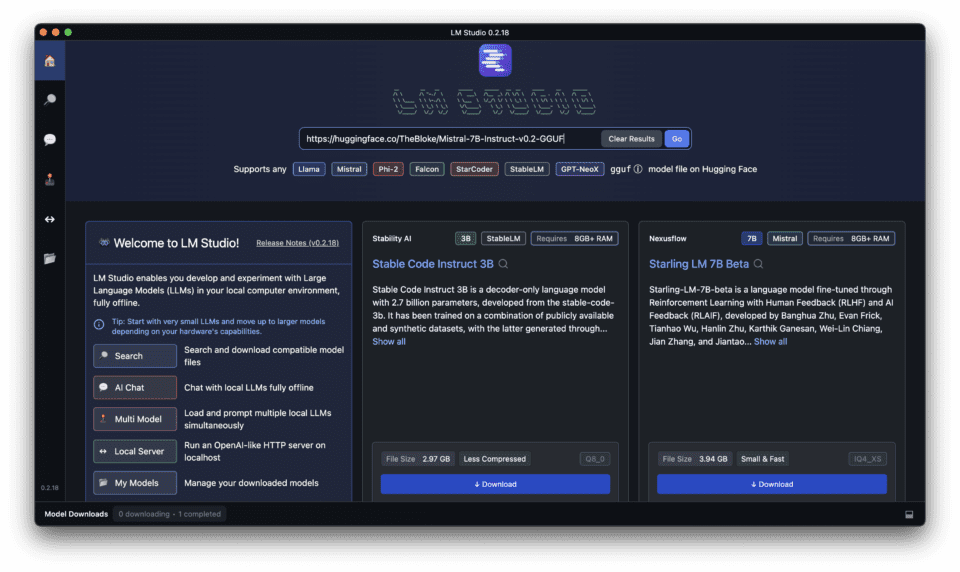

Introducing LM Studio for Running LLMs Locally

The Advantages of Local LLMs over Cloud-Based Solutions

Running large language models (LLMs) locally on your computer offers several advantages over cloud-based solutions:

- Privacy and Control: Keeping your data on your own machine ensures that you have full control over your information.

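- Faster Response Times: Running LLMs locally removes the network round trip to a remote server, which can reduce latency, although actual speed depends on your hardware and the size of the model.

- Cost-Effective: Local LLMs can be more cost-effective in the long run, as you don’t pay subscription or usage fees for cloud services, only for the hardware you run them on.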

Ensuring Privacy and Control with LM Studio

LM Studio is designed to provide privacy and control when running LLMs locally. It works offline once a model is downloaded, does not depend on a cloud service for inference, and runs openly available models, so your data stays on your machine.

How LM Studio Stands Out from Other Local LLM Platforms

LM Studio differentiates itself from other local LLM platforms by offering a user-friendly interface, compatibility with various devices, and a strong community of developers and users.

Download and Install LM Studio: Step by Step

Follow the tutorial below to download, install, and run local LLMs with LM Studio on your PC or laptop.

Setting Up an Efficient Local Inference Server with LM Studio

LM Studio offers a user-friendly way to run large language models (LLMs) locally on your computer, including a local inference server that other programs can call. Here’s what you need to know for an efficient setup:

1. System Requirements:

Before downloading and installing LM Studio, make sure your computer meets the following requirements.

LM Studio works best with capable hardware, particularly a GPU with plenty of VRAM. Here’s a general guideline:

- Operating System: Compatible with Windows, Mac, and Linux.

- GPU: Supports a range of GPUs, including NVIDIA RTX, Apple Silicon (M1 and later), and AMD.

- Processor: Multi-core processor (i5 or equivalent) for smoother performance.

- RAM: At least 8GB of RAM, with 16GB or more ideal for larger models.

- Storage: Enough free space to accommodate the chosen LLM (models can range from a few GB to tens of GB).

- Internet Connection: A stable internet connection is required for downloading the software and the models; once a model is downloaded, it runs offline.
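
As a rough way to check the storage and processor items above, here is a minimal Python sketch that uses only the standard library. The function names, the 2 GiB of headroom, and the 4 GiB example model size are illustrative assumptions, not LM Studio requirements. Installed RAM is left out because the Python standard library has no portable call for it.

```python
import os
import shutil

GIB = 1024 ** 3  # bytes in one gibibyte


def fits_on_disk(model_size_gib: float, free_bytes: int, headroom_gib: float = 2.0) -> bool:
    """Return True if a model of the given size fits with some spare room left over."""
    return free_bytes >= (model_size_gib + headroom_gib) * GIB


def describe_machine(path: str = ".") -> dict:
    """Collect the CPU core count and the free disk space for the drive holding `path`."""
    usage = shutil.disk_usage(path)
    return {
        "cpu_cores": os.cpu_count(),
        "free_disk_gib": round(usage.free / GIB, 1),
    }


if __name__ == "__main__":
    print(describe_machine())
    # 4.0 GiB is a hypothetical model size used only for illustration.
    free = shutil.disk_usage(".").free
    print("Room for a 4 GiB model:", fits_on_disk(4.0, free))
```

Run it from the drive where you plan to store models, since free space is measured for the drive that contains the current directory.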

2. Installation:

To download LM Studio, follow these steps:

- Visit the LM Studio website.

- Click on the “Download” button.

- Choose the appropriate version for your operating system.

Choosing the Right Model for Your Needs

3. Choosing an LLM:

LM Studio lets you download a variety of openly available LLMs, each with different capabilities and sizes. Consider your specific writing needs and these factors when choosing one:

- Model Size: Larger models offer more capabilities but require more resources.

- Focus: Some models specialize in specific tasks like writing or code generation.

- Quantization: Look for “quantized” versions of models. These are smaller and run faster, usually with only a modest loss of accuracy.
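
To see how model size and quantization interact, the following sketch estimates the storage needed for a model's weights from its parameter count and the number of bits per weight. It is a back-of-the-envelope calculation with an illustrative function name; real model files are somewhat larger because quantized formats store extra scaling data and some layers at higher precision.

```python
def estimated_model_size_gb(parameters_billions: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (1 GB = 10**9 bytes), ignoring file overhead."""
    total_bytes = parameters_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Compare a 7-billion-parameter model at three precisions.
    for bits in (16, 8, 4):
        size = estimated_model_size_gb(7, bits)
        print(f"7B parameters at {bits}-bit: about {size:.1f} GB")
```

By this estimate, a 7-billion-parameter model needs about 14 GB at 16-bit precision and about 3.5 GB at 4-bit, which is why quantized versions are the practical choice on machines with 8GB to 16GB of RAM.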

The table below compares some of the different LLM models available in LM Studio, highlighting their capabilities and ideal use cases.

If you need a more comprehensive list of models, check out my article comparing the Top 15 Large Language Models, including the open-source ones, with their size, country of development, and more.

| Model Name | Size | Capabilities | Ideal Use Cases |
|---|---|---|---|
| Mistral 7B | Small | Basic text generation, simple NLP tasks | Quick drafts, idea generation |
| Llama (13B-class variants) | Medium | Advanced text generation, moderate NLP tasks | Content creation, moderate complexity analysis |
| Mixtral 8x7B | Large | High-quality text generation, complex NLP tasks | Detailed articles, complex data analysis |
| Llama (70B-class variants) | Extra Large | Highest-quality text generation of the four, highly complex NLP tasks | Research papers, advanced content creation |

4. Downloading the LLM:

- Within LM Studio, search for the desired LLM and download it. The download time varies with the model size and your internet speed.

Running Your First Local Inference

5. Starting the Local Inference Server:

- Once the LLM is downloaded, navigate to the Server tab within LM Studio (labeled “Developer” in some newer versions).

- Click “Start Server” to initiate the local inference server. This process might take a few moments depending on the model size.
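
Once the server is running, a quick way to confirm it is reachable is to ask it which models it exposes. The sketch below assumes the server uses LM Studio's default address, `http://localhost:1234/v1`, and returns an OpenAI-style `/models` response; adjust `BASE_URL` if your Server tab shows a different port. The function names are illustrative.

```python
import json
import urllib.request

# Assumed default address of LM Studio's local server; change it if you use another port.
BASE_URL = "http://localhost:1234/v1"


def parse_model_ids(response_body: str) -> list[str]:
    """Extract model identifiers from an OpenAI-style /models response body."""
    payload = json.loads(response_body)
    return [entry["id"] for entry in payload.get("data", [])]


def list_local_models(base_url: str = BASE_URL, timeout: float = 5.0) -> list[str]:
    """Ask the running local server which models it currently exposes."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as response:
        return parse_model_ids(response.read().decode("utf-8"))
```

Calling `list_local_models()` while the server is running should return the identifiers of the loaded models; if the server is not running, `urlopen` raises a `urllib.error.URLError` instead.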

6. Optimizing Efficiency:

- Match Model to Needs: Choose an LLM that aligns with your specific tasks. Don’t go for a giant model if you only need basic writing assistance.

- Batch Processing: For some models, sending multiple prompts at once can be more efficient than sending individual requests. Consult the LM Studio documentation for specific model capabilities.

- Resource Monitoring: Keep an eye on your system resource usage while running the server. Adjust LM Studio settings or choose a smaller model if it strains your machine.
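
The batch-processing tip can be sketched as a small helper that sends several prompts concurrently and returns the replies in the original order. The `send` parameter is a hypothetical stand-in for whatever function actually calls your local server, and `fake_send` exists only so the sketch runs offline. Whether concurrency helps in practice depends on the server and your hardware, since a single GPU may still process requests one at a time.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_batch(prompts: list[str], send: Callable[[str], str], max_workers: int = 2) -> list[str]:
    """Send prompts concurrently and return replies in the same order as the prompts."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in input order, even if tasks finish out of order.
        return list(pool.map(send, prompts))


def fake_send(prompt: str) -> str:
    """Stand-in for a real request to the local server, so the sketch runs offline."""
    return f"reply to: {prompt}"


if __name__ == "__main__":
    for reply in run_batch(["Draft a headline", "Suggest three tags"], fake_send):
        print(reply)
```

Keeping `max_workers` small is a deliberate choice here: it limits how hard the batch pushes your machine, which fits the resource-monitoring advice above.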

Additional Resources:

- LM Studio Documentation provides detailed information on available models, configuration options, and troubleshooting tips.

How to Use LM Studio for Natural Language Processing Tasks

Unleashing LM Studio’s Power for Natural Language Processing Tasks

LM Studio can handle a range of Natural Language Processing (NLP) tasks, from content creation to data analysis, giving you AI assistance without compromising privacy. Here’s how to make use of it:

1. Text Generation:

- Craft compelling marketing copy, product descriptions, or social media posts by providing prompts or outlines for LM Studio to elaborate on.

- Brainstorm creative content ideas by feeding it starting lines and letting the model generate variations.

- Generate different writing styles by adjusting your prompts and experimenting with different LLMs.

2. Text Summarization:

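- Provide lengthy articles or research papers to the model in LM Studio, and it can generate concise summaries, saving you valuable reading time. Very long documents may need to be split into sections to fit the model’s context window.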

- Summarize customer reviews or feedback reports to quickly grasp key points and identify trends.
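
For documents too long to summarize in one request, a common approach is to split the text into chunks, summarize each chunk, and then summarize the summaries. The sketch below covers the splitting and prompt-building steps only. The 300-word chunk size and the prompt wording are assumptions to tune for your model, and word counts are only a rough proxy for the tokens a model actually counts.

```python
def split_into_chunks(text: str, max_words: int = 300) -> list[str]:
    """Split text into consecutive chunks of at most `max_words` words."""
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summary_prompt(chunk: str, max_sentences: int = 3) -> str:
    """Build a summarization prompt for a single chunk of text."""
    return (
        f"Summarize the following text in at most {max_sentences} sentences.\n\n"
        f"Text:\n{chunk}"
    )


if __name__ == "__main__":
    article = "word " * 700  # placeholder for a real article
    chunks = split_into_chunks(article)
    print(f"{len(chunks)} chunks; first prompt starts with:")
    print(summary_prompt(chunks[0])[:80])
```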

3. Machine Translation:

- Translate text from one language to another with a multilingual LLM. The results may not be on par with dedicated translation services, but they can be handy for basic needs.

4. Text Classification:

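- Prompt an LLM to categorize your data based on specific criteria, giving it the list of categories and, ideally, a few labeled examples. For example, classify customer support emails by issue type or sentiment. (LM Studio runs existing models; it does not train them.)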

- This functionality can be helpful for organizing large datasets or automating basic filtering tasks.
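
Classification with a local LLM usually comes down to two steps: building a prompt that lists the allowed categories, and mapping the model's free-text reply back onto one of them. The following is a minimal sketch of both steps; the category names and the fallback rule are hypothetical choices for a support-email example.

```python
from typing import Sequence

# Hypothetical categories for a customer-support inbox.
LABELS = ("billing", "technical", "other")


def classification_prompt(email: str, labels: Sequence[str] = LABELS) -> str:
    """Build a prompt that asks for exactly one of the allowed categories."""
    return (
        "Classify the email into exactly one of these categories: "
        + ", ".join(labels)
        + ".\nAnswer with the category name only.\n\nEmail:\n"
        + email
    )


def parse_label(reply: str, labels: Sequence[str] = LABELS, fallback: str = "other") -> str:
    """Map a free-text model reply onto one of the allowed labels."""
    cleaned = reply.strip().lower()
    for label in labels:
        if label.lower() in cleaned:
            return label
    # Models sometimes answer with a sentence or an unknown word; fall back safely.
    return fallback
```

Parsing the reply defensively matters because a model may answer "Billing." or "This looks like a technical issue" rather than the bare label.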

5. Textual Entailment:

- Determine whether a specific statement logically follows from another by asking the model to reason about the pair.

- This can be useful for tasks like analyzing legal documents or identifying inconsistencies in text.

6. Question Answering:

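- Give an LLM the relevant passages from a specific knowledge base in the prompt and use it to answer your questions in natural language. LM Studio does not train models, so the knowledge has to come from the model itself or from the context you supply.

- This can be helpful for creating internal FAQs or customer service chatbots that run on a local LLM instead of a cloud service like ChatGPT.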
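
One simple way to answer questions from a knowledge base without training anything is to pick the most relevant snippets and place them in the prompt. The sketch below ranks snippets by plain word overlap with the question, which is a deliberately naive stand-in for proper search or embeddings. All function names and the prompt wording are illustrative assumptions.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def pick_context(question: str, snippets: list[str], top_k: int = 2) -> list[str]:
    """Return the snippets that share the most words with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        snippets,
        key=lambda snippet: len(question_words & tokenize(snippet)),
        reverse=True,
    )
    return ranked[:top_k]


def qa_prompt(question: str, context: list[str]) -> str:
    """Build a prompt that restricts the answer to the supplied context."""
    joined = "\n".join(f"- {snippet}" for snippet in context)
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say you do not know.\n\n"
        f"Context:\n{joined}\n\nQuestion: {question}"
    )
```

Telling the model to admit when the context lacks the answer helps reduce made-up responses, which is important for an FAQ or customer service bot.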

Utilizing LM Studio for NLP Tasks:

- Choose the Right LLM: Select an LLM specifically trained for the NLP (Natural Language Processing) task you wish to perform. Documentation will often indicate the model’s strengths.

- Craft Effective Prompts: Provide clear and concise prompts that guide the LLM towards the desired outcome, similar to interacting with ChatGPT. The better your prompts, the more accurate the results.

- Experiment and Iterate: Don’t be afraid to experiment with different prompts and LLM settings. It’s an iterative process, and you will often adjust settings in the UI, such as temperature, to tune the model’s behavior.

- Refine and Post-Process: The LLM’s output might require some polishing or human oversight for specific tasks. Factor in review and editing for accuracy, especially for critical tasks.

Remember:

- While LM Studio offers impressive NLP capabilities, it’s still under development. Results may vary depending on the complexity of the task and the chosen model.

Advanced Features of LM Studio: Enhancing Your Local LLM Experience

Exploring Advanced Settings and Options in LM Studio

LM Studio offers advanced settings and options to enhance your local LLM experience, including:

- Quantization and Optimization Techniques: These techniques can improve response times and reduce resource usage.

- Integration with Other Applications and APIs: LM Studio can be integrated with various applications and APIs for seamless use.

LM Studio: Compatibility, Support, and Community

Ensuring Compatibility: LM Studio Support for Windows, Mac, Linux, and Various GPUs

LM Studio is compatible with various operating systems and GPUs, ensuring that it can be used by a wide range of users.

Getting Help: Leveraging the LM Studio Community and Support Channels

If you need assistance with LM Studio, consult the community or support channels for helpful resources and answers to your questions.

Contributing to the LM Studio Ecosystem: Open-Source Components

The LM Studio desktop app itself is not open source, but some related components, such as its command-line tool and SDKs, are developed in the open. If you’re interested in contributing, explore those repositories and join the community of developers.

Frequently Asked Questions (FAQs)

What are the LM studio requirements for running locally on Mac and Windows?

To run an LLM locally with the free LM Studio app, your Mac or Windows computer must meet a few requirements. On both operating systems, plan for at least 8GB of RAM, though 16GB is recommended for optimal performance. On Windows, you need Windows 10 or newer. On macOS, LM Studio targets Apple Silicon (M1 or newer) Macs, so check the official system requirements for the minimum supported macOS version. The app itself needs roughly 400MB of storage, and each model you download adds anywhere from a few GB to tens of GB on top of that. For advanced use cases, a compatible GPU for GPU acceleration is suggested.

How do I download and install LM Studio on my computer?

Downloading and installing LM Studio is straightforward. For step 1, visit the official LM Studio website and download the installer for your operating system (Windows, macOS, or Linux). Models are a separate download: you fetch them later from inside the app, which pulls them from Hugging Face. For step 2, locate the downloaded file in your Downloads folder and double-click it to begin the installation. For step 3, follow the on-screen instructions, which may include agreeing to the terms of service and selecting an installation location. Once the installation completes, you can launch LM Studio from your applications list or start menu.

How do I choose a model to download in LM Studio for local use?

To choose a model to download for use in LM Studio, start by launching the app and connecting to the internet. Open the model search section, where you can browse available LLMs such as Mistral, Llama, and Mixtral. Consider your specific needs: how large a model your RAM and VRAM can handle, which quantization level to use, and whether you want a variant tuned for a particular task such as chat or code. Select your chosen model to see more details and click ‘Download’ to begin. Note that models can take a while to download depending on their size and your internet speed.

How do I start a chat session using my downloaded LLM in LM Studio?

After successfully downloading and setting up an LLM locally in LM Studio, starting a chat session is simple. Open LM Studio, and in the main menu, select the ‘Chat’ feature. From there, choose the downloaded model you wish to use for the session. You’ll be taken to a chat interface where you can type your queries or prompts. Hit ‘Enter’ or click ‘Send’ to submit your text, and the LLM will generate a response based on the input, displaying it in the chat window. This feature can be used for various interactions, from getting writing assistance to code debugging.
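
Behind a chat window, a session is essentially a growing list of messages that is sent to the model on every turn, which is how the model "remembers" earlier exchanges. The sketch below shows that bookkeeping. It assumes the OpenAI-style `system`/`user`/`assistant` roles, and the `send` callable is a hypothetical stand-in for a function that forwards the messages to your local model.

```python
from typing import Callable

Message = dict[str, str]


class ChatSession:
    """Keeps the running message history that a chat model receives on every turn."""

    def __init__(
        self,
        send: Callable[[list[Message]], str],
        system_prompt: str = "You are a helpful writing assistant.",
    ) -> None:
        self._send = send
        self.messages: list[Message] = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text: str) -> str:
        """Add the user's message, get a reply, and record the reply in the history."""
        self.messages.append({"role": "user", "content": user_text})
        reply = self._send(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


if __name__ == "__main__":
    # An offline stand-in that just reports how much history it was given.
    session = ChatSession(lambda msgs: f"(model saw {len(msgs)} messages)")
    print(session.ask("Help me outline a blog post."))
    print(session.ask("Make the intro shorter."))
```

Because the whole history is resent each time, long conversations eventually approach the model's context limit, at which point starting a new chat or trimming old messages helps.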

Can I use LM Studio for generating transcripts locally?

Not directly. LM Studio runs text-based LLMs, and models like Mistral are language models rather than speech recognition models, so they cannot turn audio into text on their own. To stay fully offline, create the transcript first with a dedicated speech-to-text tool, such as OpenAI’s open-source Whisper model running locally, then paste the transcript into LM Studio to clean it up, summarize it, or extract action items. This combination still gives you quick, offline help with meetings, interviews, or content creation.

What is quantization in LM Studio, and how does it improve performance?

Quantization is a technique that optimizes model performance by reducing the precision of the numbers used within the model. It allows larger models to run on devices with limited resources by decreasing the overall size of the model and speeding up computation, which is especially beneficial for devices without dedicated GPU acceleration. It balances performance and quality, making it possible to use advanced local models on a wider range of computers. In LM Studio, you choose the quantization level when you download a model: most models are offered in several quantized variants (for example, 4-bit or 8-bit), and you pick the one that best suits your balance between performance and accuracy.
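
To make "reducing the precision of the numbers" concrete, here is a minimal sketch of symmetric 8-bit quantization: each weight is stored as a small integer plus one shared scale factor, then converted back when needed. This is the simplest textbook variant with illustrative function names, not the exact scheme used by the model files LM Studio downloads, which typically quantize weights in small blocks with more elaborate formats.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric quantization: map floats to integers in [-127, 127] plus one scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    original = [0.12, -0.98, 0.45, 0.003]
    quantized, scale = quantize_int8(original)
    restored = dequantize(quantized, scale)
    for before, after in zip(original, restored):
        print(f"{before:+.4f} -> {after:+.4f} (error {abs(before - after):.4f})")
```

Each restored weight differs from the original by at most half a quantization step, which is the small accuracy cost traded for storing one byte per weight instead of two or four.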

Is it possible to integrate local LLMs from LM Studio with external apps via API?

Yes, LM Studio offers API integration for the local models you’ve set up, allowing developers to extend their external apps or services with large language models (LLMs) running locally. This supports a variety of use cases, from enhancing chat applications with natural language processing to incorporating advanced text analysis into content management systems. To use the API, start the local server from within LM Studio. It exposes an OpenAI-compatible HTTP endpoint on your own machine (by default at http://localhost:1234/v1), so many OpenAI client libraries can be pointed at it by changing the base URL, and a real API key is generally not needed for local use.
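
As a minimal sketch of such an integration, the code below sends a single prompt to a locally running LM Studio server using only Python's standard library. It assumes the server is listening at the default address `http://localhost:1234/v1` and follows the OpenAI chat completions format. The `MODEL_NAME` value is a placeholder to replace with the identifier of a model you have loaded, and the function names are illustrative.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumed default local server address
MODEL_NAME = "local-model"  # placeholder; use the identifier of your loaded model


def build_chat_request(
    prompt: str,
    model: str = MODEL_NAME,
    temperature: float = 0.7,
    base_url: str = BASE_URL,
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the local server."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body: str) -> str:
    """Pull the assistant's text out of an OpenAI-style chat completion response."""
    payload = json.loads(response_body)
    return payload["choices"][0]["message"]["content"]


def chat(prompt: str, timeout: float = 120.0) -> str:
    """Send one prompt to the local server and return the model's reply."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as response:
        return extract_reply(response.read().decode("utf-8"))
```

With the server running, `chat("Write a two-line product description for a desk lamp.")` returns the model's reply as a string. The generous timeout reflects that local generation can be slow on modest hardware.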

How can I ensure the best performance when using GPU acceleration in LM Studio?

To ensure the best performance with GPU acceleration in LM Studio, first verify that your computer’s hardware includes a compatible GPU with enough VRAM for the model you want to run. NVIDIA GPUs and Apple Silicon are commonly supported for these tasks. Next, update your GPU drivers to the latest version to ensure compatibility and optimal performance. Within LM Studio, check the settings to confirm GPU acceleration is enabled, and look for a GPU offload option that controls how much of the model runs on the GPU. If the model does not fit in VRAM, offload only part of it or choose a smaller or more heavily quantized variant. Monitoring the GPU’s memory use and temperature can also help in fine-tuning the settings for sustained operation.
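
When a model is too large for your GPU, a rough way to choose a partial offload is to estimate how many of its layers fit in the available VRAM. The sketch below makes simplifying assumptions that are not taken from LM Studio: every layer is treated as the same size, and a fixed reserve (1.5 GB by default) is set aside for the context cache and other overhead. Treat the result as a starting point to adjust while watching actual memory use.

```python
import math


def layers_that_fit(
    model_size_gb: float,
    total_layers: int,
    vram_gb: float,
    reserve_gb: float = 1.5,
) -> int:
    """Estimate how many equally sized layers fit in VRAM after a safety reserve."""
    if total_layers < 1 or model_size_gb <= 0:
        raise ValueError("model_size_gb must be positive and total_layers at least 1")
    per_layer_gb = model_size_gb / total_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    return min(total_layers, math.floor(usable_gb / per_layer_gb))


if __name__ == "__main__":
    # Hypothetical example: an 8 GB model with 32 layers on a GPU with 6 GB of VRAM.
    print(layers_that_fit(model_size_gb=8.0, total_layers=32, vram_gb=6.0))
```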