What is PaLM?

Pathways Language Model, or PaLM, is a Google model built on a dense, decoder-only Transformer architecture with 540 billion parameters. It was trained using Google’s Pathways system, which orchestrates training efficiently across multiple TPU pods and is part of Google’s broader vision of a single model that can handle many tasks at once, pick up new skills quickly, and reflect a more complete understanding of the world. PaLM can produce text in a variety of languages and formats, including natural language and code.

What is GPT?

Generative Pre-trained Transformer (GPT) is a family of AI models created by OpenAI that use a Transformer architecture with varying numbers of parameters. The most recent version, GPT-4, is a multimodal model that accepts image and text inputs and produces text output. OpenAI has not disclosed its parameter count or the details of its training dataset.

GPT-4 can also produce text across multiple languages and domains, although it may need additional adaptation for particular tasks. UberCreate is a fine-tuned version of OpenAI’s GPT-3.5 and GPT-4 models that performs tasks such as AI content creation, AI code generation, and AI image generation.

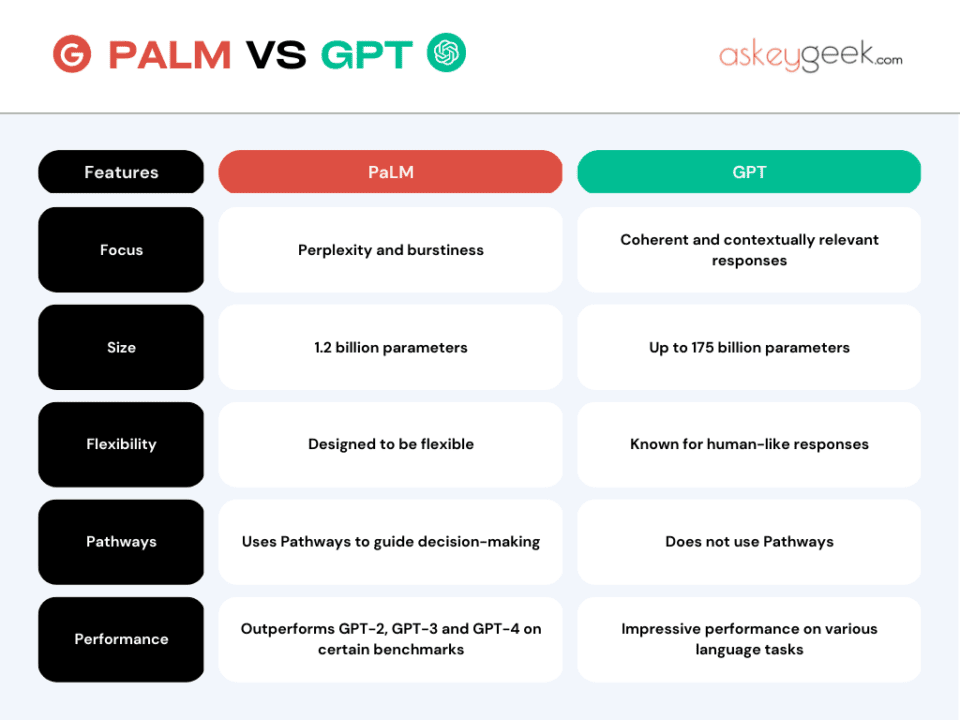

PaLM vs GPT

Both PaLM and GPT are impressive models that demonstrate the power of language modeling and its potential for various applications. However, they also have some differences and trade-offs that we will explore below in the PaLM vs GPT feature comparison table.

Key Features of PaLM

- Perplexity and burstiness

- Up to 540 billion parameters

- Designed to be flexible

- Trained with Google’s Pathways system across multiple TPU pods

- Outperforms GPT-2 and GPT-3 on certain benchmarks

Key Features of GPT

- Coherent and contextually relevant responses

- Up to 175 billion parameters in GPT-3 (the size of GPT-4 is undisclosed)

- Known for human-like responses

- Does not use Pathways

- Impressive performance on various language tasks

Scalability of PaLM and GPT

PaLM was trained with an efficient parallelism strategy and a reformulation of the Transformer block that allows for faster training. According to Google, PaLM achieved a hardware FLOPs utilization of 57.8%, the highest reported for LLMs at this scale at the time. Because OpenAI has not published GPT-4's parameter count, the two models cannot be compared directly on size.

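GPT-4, on the other hand, has no published figures for its training data or compute budget, so its training efficiency cannot be compared directly with PaLM's. If it does rely on a larger model and more resources, that may limit its scalability and accessibility.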
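To make the utilization figure above more concrete, here is a minimal Python sketch of how a FLOPs utilization ratio can be estimated. It assumes the common rule of thumb of roughly 6 FLOPs per parameter per training token for a dense Transformer, which ignores attention-specific terms. The function names and the numbers in the example are hypothetical and are not taken from Google's report. Note that this simple ratio is closer to a model FLOPs utilization; a hardware FLOPs utilization figure also counts any recomputation done during training.

```python
def training_flops_per_token(num_params: float) -> float:
    """Approximate training FLOPs per token for a dense Transformer.

    Assumption: the 6 * N rule of thumb (forward plus backward pass),
    which leaves out the attention-specific terms.
    """
    return 6.0 * num_params


def flops_utilization(
    num_params: float,
    tokens_per_second: float,
    num_chips: int,
    peak_flops_per_chip: float,
) -> float:
    """Return achieved FLOPs per second divided by theoretical peak."""
    achieved = training_flops_per_token(num_params) * tokens_per_second
    peak = num_chips * peak_flops_per_chip
    return achieved / peak


if __name__ == "__main__":
    # Hypothetical values chosen only to illustrate the arithmetic.
    utilization = flops_utilization(
        num_params=1e9,            # 1 billion parameters
        tokens_per_second=1000.0,  # measured training throughput
        num_chips=2,               # accelerators in the job
        peak_flops_per_chip=1e13,  # peak FLOPs per second per chip
    )
    print(f"Estimated utilization: {utilization:.1%}")
```

A higher ratio means more of the hardware's theoretical throughput is spent on useful model computation, which is why the figure is used as a measure of training efficiency.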

Versatility of PaLM and GPT

Both PaLM and GPT-4 can generate text in multiple languages and domains. PaLM, trained at scale with the Pathways system, can leverage its existing knowledge and skills to learn new tasks quickly from a few examples given in the prompt. For example, PaLM can generate code from natural language descriptions without any fine-tuning.

GPT-4, on the other hand, can also be steered with prompts alone, but where a specific task or domain does call for fine-tuning, that increases its dependency on task-specific data.
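Using a model "without any fine-tuning" usually means few-shot prompting: a handful of worked examples are placed in the prompt, followed by the new request. The Python sketch below shows one way such a prompt could be assembled for code generation. The `build_few_shot_prompt` helper and the `Description:` / `Code:` layout are illustrative assumptions, not a format prescribed by Google or OpenAI, and the sketch deliberately stops short of calling any model API.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a few-shot prompt from (description, code) pairs.

    Each example shows the model the expected input and output shape.
    The final entry leaves the code section empty for the model to fill.
    """
    parts = []
    for description, code in examples:
        parts.append(f"Description: {description}\nCode:\n{code}\n")
    parts.append(f"Description: {query}\nCode:\n")
    return "\n".join(parts)


if __name__ == "__main__":
    # Hypothetical examples; any language model client could consume the result.
    examples = [
        ("add two numbers", "def add(a, b):\n    return a + b"),
        ("reverse a string", "def reverse(s):\n    return s[::-1]"),
    ]
    print(build_few_shot_prompt(examples, "multiply two numbers"))
```

The model's weights stay unchanged in this workflow. Only the prompt differs from task to task, which is what separates it from fine-tuning.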

Performance of PaLM and GPT

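Both PaLM and GPT-4 reported state-of-the-art performance at release on a wide range of language understanding and generation tasks across different domains. However, a direct comparison is hard to make: PaLM's published results compare it with earlier models such as GPT-3, which PaLM 540B surpassed in few-shot performance on 28 of 29 widely used English NLP tasks, and GPT-4 was released afterwards.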

For example, PaLM's reported evaluations cover tasks such as natural language inference (NLI), question answering (QA), summarization (SUM), machine translation (MT), and code generation (CG). PaLM is a text-only model, so image captioning (IC) and other multimodal outputs are outside its scope. The PaLM paper also highlights capabilities that emerged at scale, such as multi-step reasoning with chain-of-thought prompting and explaining jokes.

PaLM vs GPT Infographics

Here is a table summarizing the differences between PaLM and GPT:
