The Ultimate guide to popular large language models

Imagine you’re struggling to pen the perfect story, your fingers hovering over the keyboard as the blank page taunts you. Suddenly, you remember your secret weapon: a large language model (LLM). With a few prompts, the LLM weaves a tale so captivating, it feels like magic. This is just one example of the power of LLMs, sophisticated AI systems that are reshaping the way we interact with technology.

In the ever-changing and intriguing world of Artificial Intelligence (AI), large language models (LLMs) have been making waves with their impressive capabilities in handling human language. But what exactly are these LLMs, and how do they revolutionize our daily interactions and tasks? Let’s delve into this captivating realm and uncover some of the most prominent LLMs shaping the future of AI.

Understanding Large Language Models

LLMs are advanced machine learning models that predict and generate human-like text. They can autocomplete sentences, translate languages, and even craft entire articles. These models have evolved from simple word predictors to complex systems capable of handling paragraphs and documents.

How Do Large Language Models Work?

LLMs estimate the likelihood of sequences of words, using vast datasets to learn language patterns. They are built on architectures like Transformers, which focus on the most relevant parts of the input to process longer sequences efficiently.

The Evolution of LLMs: From BERT to GPT-4

Examplesof Large Language Models in Action

ChatGPT, a variant of OpenAI’s GPT models, has become a household name, powering chatbots that offer human-like interactions.

BERT’s Impact on Natural Language Processing Tasks

BERT has significantly improved the performance of natural language processing tasks, such as sentiment analysis and language translation.

GPT-3 and the Frontier of Text Generation

GPT-3’s ability to generate creative and coherent text has opened up new possibilities in content creation and beyond.

A Cheat Sheet to Today's Prominent Large Language Models Comparison

To better grasp the magnitude and diversity of large language models, let’s take a closer look at some of the most influential publicly available models currently dominating the landscape. Each model brings unique strengths and excels in various use cases.

This table provides a quick overview of some of the most influential large language models as of 2024. BERT, introduced by Google, is known for its transformer-based architecture and was a significant advancement in natural language processing tasks. Claude, developed by Anthropic, focuses on constitutional AI, aiming to make AI outputs helpful, harmless, and accurate. Cohere, an enterprise LLM, offers custom training and fine-tuning for specific company use cases. Ernie, from Baidu, has a staggering 10 trillion parameters and is designed to excel in Mandarin but is also capable in other languages.

Local LLMs vs Cloud LLMs

While cloud-based LLMs offer impressive capabilities, a growing trend is the use of local inference with open-source models. Tools like LM Studio allow users to run run LLMs locally directly on their machines.

This approach prioritizes privacy by keeping all data and processing offline. However, local inference typically requires more powerful hardware and may limit access to the most cutting-edge models due to their size.

Top 15 Popular Large Language Models

| Model Name | Size (Parameters) | Open Source? | Last Updated (Estimated) | Company | Country of Development |

| AI21 Studios Jurassic-1 Jumbo | 178B | Yes | December 2022 | AI21 Studios | Israel |

| Google Gemma | 2B or 7B | Yes | May 2023 | Google AI | United States |

| Meta LLaMA 13B | 13B | Yes | Early 2023 | Meta AI | United States |

| Meta LLaMA 7B | 7B | Yes | Early 2023 | Meta AI | United States |

| EleutherAI GPT-J | 6B | Yes | May 2023 (through forks like Dolly 2) | EleutherAI (research group) | United States |

| The Pile – EleutherAI | 900GB Text Data | Yes | Ongoing development | EleutherAI (research group) | United States |

| Mistral AI – Mistral Large | Not publicly disclosed (Large) | Open-source with paid options | September 2023 | Mistral AI | France |

| Falcon 180B | 180B | Yes | Not specified | Technology Innovation Institute | UAE |

| BERT | 342 million | No | July 2018 | Google AI | United States |

| Ernie | 10 trillion | No | August 2023 | Baidu | China |

| OpenAI GPT-3.5 | 175B | No | Late 2022 | OpenAI | United States |

| Claude | Not specified | No | Not specified | Anthropic | United States |

| Cohere | Not publicly disclosed (Massive) | No | Ongoing Development | Cohere | Canada |

| Google PaLM (research focus) | Not publicly disclosed (Likely very large) | No | Under Development | Google AI | United States |

| OpenAI GPT-4 | Not publicly disclosed (Successor to GPT-3.5) | No | Under Development | OpenAI | United States |

LLMs Country Of Development Comparison

When comparing the top 15 Large Language Models (LLMs), the United States contributes nearly 67% of the LLM development market share by 10 out of 15.

| Country of Development | Number of Models |

| Canada | 1 |

| China | 1 |

| France | 1 |

| Israel | 1 |

| UAE | 1 |

| United States | 10 |

| Grand Total | 15 |

LLM Architectures and Training Methods

| Architecture/Method | Description |

|---|---|

| Transformer | A neural network architecture that relies on attention mechanisms to improve the efficiency and accuracy of processing sequential data. It’s the foundation of many modern LLMs. |

| Pre-training | The initial stage of training an LLM, exposing it to a vast amount of unlabelled text data to learn the statistical patterns and structures of language. |

| Fine-tuning | Refining a pre-trained model by training it on specific data related to a particular task, enhancing its performance for that task. |

| QLoRA | A method involving backpropagating gradients through a frozen, 4-bit quantized pre-trained language model into Low Rank Adapters (LoRA), enabling efficient fine-tuning. |

The transformer architecture has revolutionized the field of natural language processing by enabling models to handle long sequences of data more effectively. Pre-training and fine-tuning are critical stages in the development of LLMs, allowing them to learn from vast amounts of data and then specialize in specific tasks. QLoRA represents an advanced technique for fine-tuning LLMs, reducing memory demands while maintaining performance

Key Use Cases for Large Language Models

How LLMs Revolutionize Language Translation and Sentiment Analysis

LLMs have transformed language translation by understanding and translating vast amounts of data, while sentiment analysis has become more nuanced thanks to their deep learning capabilities.

Enhancing Human-Machine Interactions with Chatbots

Chatbots powered by LLMs offer personalized and efficient customer support, changing the face of customer service.

Transforming Content Creation Through Generative AI

Generative AI models like GPT-3 have made it possible to create high-quality content quickly, aiding writers and designers alike.

Challenges and Limitations of Implementing LLMs

Addressing Concerns Around Bias and Ethical Use

The training data for LLMs can introduce biases, raising ethical concerns that must be addressed.

Understanding the Computational Costs of Training Large Models

Training LLMs requires significant computational resources, which can be costly and environmentally unsustainable.

The Limitations in Language Understanding and Context Grasping

Despite their capabilities, LLMs still struggle with understanding context and subtleties of human language.

How Large Language Models are Trained and Fine-Tuned

The Importance of Vast Amounts of Data in Pre-Training LLMs

LLMs require large datasets to learn a wide range of language patterns and nuances.

Fine-Tuning Techniques for Specific Applications

Techniques like transfer learning and fine-tuning with transformer models are used to adapt LLMs to specific tasks.

Emergence of Foundation Models in Machine Learning

Foundation models are a new trend in machine learning, providing a base for building specialized models.

Differences between pre-training and task-specific training

Pre-training and task-specific training (often referred to as fine-tuning) are two critical phases in the development of large language models (LLMs). These stages are foundational to how LLMs understand and generate human-like text, each serving a distinct purpose in the model’s learning process.

Pre-training LLMs

Pre-training is the initial, extensive phase where an LLM learns from a vast corpus of text data. This stage is akin to giving the model a broad education on language, culture, and general knowledge. Here are the key aspects of pre-training:

- General Knowledge Base: The model develops an understanding of grammar, idioms, facts, and context by analyzing a large corpus of text. This broad knowledge base enables the model to generate coherent and contextually appropriate responses.

- Transfer Learning: Pre-trained models can apply their learned language patterns to new datasets, especially useful for tasks with limited data. This ability significantly reduces the need for extensive task-specific data.

- Cost-Effectiveness: Despite the substantial computational resources required for pre-training, the same model can be reused across various applications, making it a cost-effective approach.

- Flexibility and Scalability: The broad understanding obtained during pre-training allows for the same model to be adapted for diverse tasks. Additionally, as new data becomes available, pre-trained models can be further trained to improve their performance.

Task-Specific Training (Fine-Tuning)

After pre-training, models undergo fine-tuning, where they are trained on smaller, task-specific datasets. This phase tailors the model’s broad knowledge to perform well on particular tasks. Key aspects of fine-tuning include:

- Task Specialization: Fine-tuning adapts pre-trained models to specific tasks or industries, enhancing their performance on particular applications.

- Data Efficiency and Speed: Since the model has already learned general language patterns during pre-training, fine-tuning requires less data and time to specialize the model for specific tasks.

- Model Customization: Fine-tuning allows for customization of the model to fit unique requirements of different tasks, making it highly adaptable to niche applications.

- Resource Efficiency: Fine-tuning is particularly beneficial for applications with limited computational resources, as it leverages the heavy lifting done during pre-training.

In summary, pre-training equips LLMs with a broad understanding of language and general knowledge, while fine-tuning tailors this knowledge to excel in specific tasks. Pre-training sets the foundation for the model’s language capabilities, and fine-tuning optimizes these capabilities for targeted applications, balancing the model’s generalization with specialization.

The Future of Large Language Models

Anticipating Next-Generation LLMs: GPT-4 and Beyond

The next generation of LLMs, like GPT-4, is expected to push the boundaries of what’s possible in AI even further.

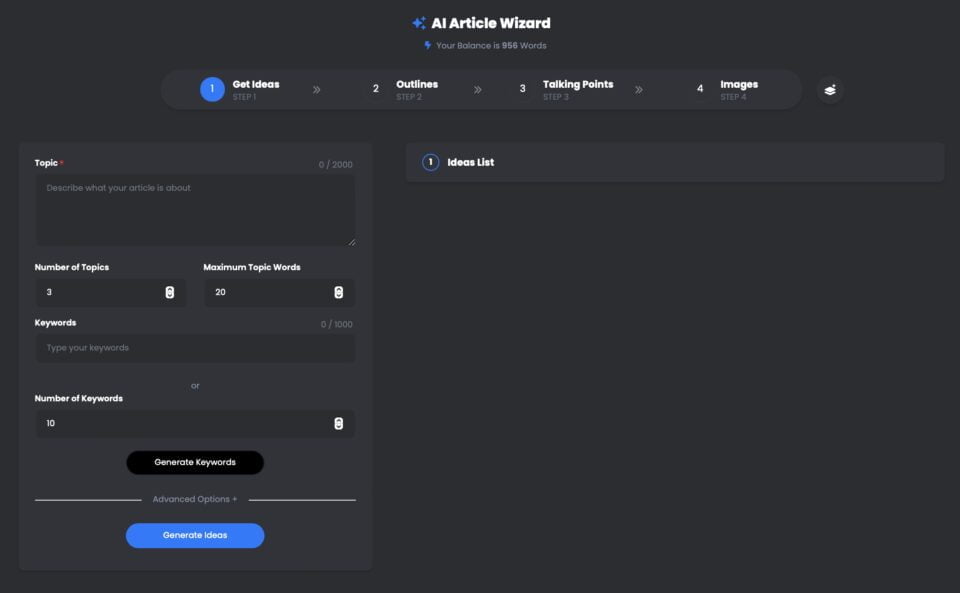

UberCreate AI Article Wizard is a powerful tool that leverages OpenAI GPT-4 large language model (LLM) to generate high-quality articles in minutes.

With UberCreate, you can say goodbye to writer’s block and hello to a detailed article in minutes. You just need to provide a topic, a keyword, and a target word count, and UberCreate will take care of the rest. It will generate an article outline, talking points, relevant images, and a final article that is ready to publish.

UberCreate AI Article Wizard Using GPT-4

Pin

Pin UberCreate uses advanced artificial intelligence technology to create content that is original, engaging, and informative. It can write about any topic, from business and marketing to health and lifestyle. It can also adapt to different tones, styles, and formats, depending on your preferences and needs.

UberCreate is not just a content generator, but also a content enhancer. It can help you improve your existing articles by adding more details, facts, and images. It can also check your grammar, spelling, and readability, and suggest ways to optimize your content for SEO and social media.

UberCreate is the only AI content creation tool you will ever need. It combines 17 AI tools in one, including a blog post generator, a social media content generator, a visual content generator, and more. It is designed to facilitate every aspect of content creation, from ideation to production.

Whether you are a blogger, a marketer, a student, or a professional, UberCreate can help you save time, money, and effort in creating high-quality content. You can try it for free and see the results for yourself.

Expanding the Boundaries of Human-AI Collaboration

LLMs are set to enhance collaboration between humans and AI, making interactions more natural and productive.

These prominent LLMs are just the tip of the iceberg when it comes to understanding the vast potential of large language models in revolutionizing our interactions with technology and expanding the boundaries of human-AI collaboration. Stay tuned for Part II of this series, where we’ll dive deeper into the capabilities of large language models, their applications in various industries, and the challenges that come with harnessing their power.

Prospects of Natural Language Understanding in the Decade Ahead

The future looks bright for natural language understanding, with LLMs becoming more sophisticated and integrated into various applications.

In conclusion, LLMs like BERT, GPT-3, and their successors are revolutionizing industries, from education to healthcare. As we continue to harness their power, we must also navigate the challenges they present, ensuring their ethical and responsible use. The journey into the world of large language models is just beginning, and the possibilities are as vast as the datasets they learn from. Dive into this exciting field, and let’s shape the future of AI together.

Frequently Asked Questions (FAQs)

What is a Large Language Model (LLM) in the context of NLP?

A Large Language Model (LLM), within the scope of Natural Language Processing (NLP), refers to an advanced AI system designed to understand, interpret, and generate human-like text. These models are trained on large amounts of data, enabling them to perform a wide range of language tasks. Through the process of training, the model learns to predict the next word in a sentence, helping it to generate coherent and contextually relevant text on demand.

What are the different types of LLMs available in 2024?

As of 2024, there are several different types of Large Language Models available, each with unique capabilities. The most notable ones include models such as GPT-4, which is known for its generative text abilities and Bard, which is Google’s counterpart focusing on a broad range of NLP tasks. These models differ in the number of parameters, data they were trained on, and their specific applications, ranging from simple text generation to complex language comprehension tasks.

How are LLMs trained on large datasets?

LLMs are trained using vast datasets collected from the internet, including books, articles, and websites. This extensive training process involves feeding the model large amounts of text data, which helps the model to identify patterns, understand context, and learn language structures. The training process can take weeks or even months, depending on the model’s size and the computational resources available. The objective is to enable the model to generate text that is indistinguishable from that written by humans.

Can you explain the applications of LLMs in everyday tasks?

LLMs can be used in a variety of applications to simplify and automate everyday tasks. This includes chatbots and virtual assistants for customer service, content creation tools to generate articles or reports, and translation services for converting text between languages. Other applications involve sentiment analysis to gauge public opinion on social media, summarization tools to condense long documents into shorter versions, and even coding assistants to help programmers by generating code snippets. Essentially, LLMs have revolutionized how we interact with technology, making it more intuitive and human-like.

How do the capabilities of large language models differ from traditional models?

Large Language Models significantly outperform traditional models in several ways. Firstly, due to their extensive training on diverse datasets, LLMs can generate more coherent, varied, and contextually appropriate responses. They are better at understanding nuances in language and can handle sequential data more efficiently. Moreover, the sheer number of parameters in LLMs enables more sophisticated reasoning and predictive capabilities compared to traditional models, which were more limited in scope and scalability. Ultimately, LLMs offer a more nuanced and versatile approach to processing and generating language.

What challenges are associated with developing and deploying LLMs?

Developing and deploying LLMs present several challenges, including the computational resources required for training, which can be substantial. Additionally, there are concerns regarding bias in the training data, which can lead the model to generate prejudiced or harmful content. Privacy issues also arise from the sensitivity of the data used in training. Furthermore, the interpretability of these models poses a challenge, as their decision-making process is complex and not always transparent. Lastly, the environmental impact of the energy-intensive training process is a growing concern.

How do language models like GPT-4 and Bard impact the field of NLP?

Models such as GPT-4 and Bard have significantly advanced the field of Natural Language Processing by demonstrating unprecedented performance on a wide range of NLP tasks. Their ability to generate text, understand context, and produce human-like responses has set new standards for what AI can achieve in understanding and producing language. These models have not only enhanced the quality and efficiency of applications like chatbots, content generation, and language translation but have also opened new avenues for research and development in NLP, pushing the boundaries of AI capabilities.

Is there a beginner’s guide to understanding and working with LLMs?

Yes, for those new to the field, a beginner’s guide to large language models can be incredibly helpful. Such a guide typically covers the basics of what LLMs are, how they’re trained, and their applications. It may offer insights into the most significant models in 2023, explain the underlying technology, and provide examples of NLP tasks that can be performed with LLMs. Starters can look for online resources, tutorials, and courses that offer an introduction to these concepts, helping to build a foundational understanding of how LLMs work and how they can be utilized in various projects.